How to use AI to personalize shopping experiences in Europe and stay compliant in 2026?

Written by Kinga Edwards

Learn how European retailers can use AI to personalize shopping experiences while staying compliant with the EU AI Act in 2026. Practical, simple guidance.

We all know shoppers want convenience. Fast delivery, fair prices, and a buying journey that feels personal. Surprise, surprise – you can use AI to make that happen. It can shape product feeds, emails, and in-store journeys around one person instead of “the average customer.”

Yet, at the same time, Europe is tightening the rules around how AI works in the background. The EU AI Act is rolling out between 2025 and 2027, with bans on some uses already in force and stricter rules for high-risk systems on the way. For retailers and brands, the message is simple: you can keep using AI to personalize shopping experiences, but you need structure, documentation, and transparency.

Quick refresher: what counts as “AI to personalize shopping experiences”?

Let’s keep the definition short. AI to personalize shopping experiences means systems that adapt what people see based on data, such as:

- Product recommendations on your homepage or checkout.

- Smart search that ranks results per visitor.

- Dynamic content blocks and emails that change per user.

Some brands add AI chatbots or virtual stylists that adjust content based on the person in front of them. Under the hood, this can be classic machine learning, large language models, or AI features inside your ecommerce platform.

In Europe, all this sits on top of GDPR and cookie consent. The AI Act adds a new layer focused on safety, transparency, and risk to people’s rights. Most retail use cases are low risk, but you must still know where AI appears, what it does, and how you control it.

EU AI Act in plain language: what retailers actually need to know

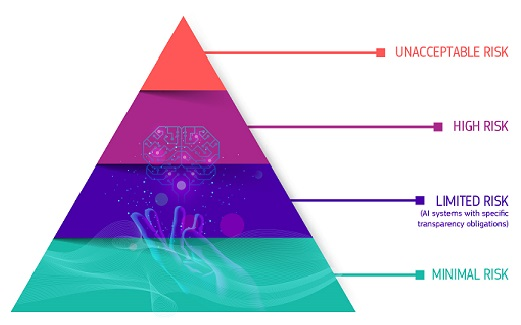

The AI Act is the first broad legal framework for AI worldwide. It uses four risk levels for AI systems: unacceptable, high, limited, and minimal. The higher the potential harm, the stricter the rules.

Unacceptable risk systems are banned. That covers things like social scoring or systems that exploit vulnerable people. Personalization in commerce should never get close to that line.

High-risk systems are allowed but heavily regulated. They need risk management, documentation, logging, human oversight, and sometimes third-party checks. Retail AI becomes high-risk if you use it to decide who gets a loan, a job, or another essential benefit.

Most AI for personalizing shopping experiences sits in the limited or minimal risk buckets. That still means duties around transparency and fair use, especially when people interact with chatbots or see AI-generated content. Bans on unacceptable practices have applied since February 2025, and rules for transparency and high-risk systems phase in through 2027.

Data foundation: collect and use customer data without getting into trouble

Every personal experience starts with data, but you do not need to track everything. For AI to personalize shopping experiences, most teams work with three main groups:

- Behavior: pages viewed, clicks, carts, purchases.

- Preferences: favourites, size and brand choices, quiz answers.

- Context: device, rough location, time of day, language.

That is usually enough to make shopping feel tailored. The AI Act and privacy rules expect clear purpose limitation and data minimisation. If a data point does not improve the experience in a clear way, skip it.

Map which data fields feed each AI feature. For every field, ask, “What benefit does this create for the shopper?” If you cannot name one, remove or anonymise it.
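The field-by-field mapping described above can be kept as a small, reviewable structure. The sketch below is only an illustration: the feature names, field names, and the `FieldUse` type are hypothetical, and a real inventory would live wherever your team already documents data flows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldUse:
    feature: str          # AI feature that consumes the field
    field: str            # data field name
    shopper_benefit: str  # empty string means nobody could name a benefit


# Hypothetical inventory; replace with your own features and fields.
FIELD_MAP = [
    FieldUse("homepage_recommendations", "viewed_products",
             "shows items related to recent browsing"),
    FieldUse("homepage_recommendations", "precise_gps_location", ""),
    FieldUse("search_ranking", "size_preferences",
             "ranks items available in the shopper's size first"),
]


def fields_without_benefit(field_map):
    """Return (feature, field) pairs that have no stated shopper benefit."""
    return [(u.feature, u.field) for u in field_map
            if not u.shopper_benefit.strip()]


if __name__ == "__main__":
    for feature, field_name in fields_without_benefit(FIELD_MAP):
        print(f"Review or remove: {field_name} (used by {feature})")
```

Anything the function returns is a candidate for removal or anonymisation.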

Then, connect your sources into a single view with solid governance. You should know where data comes from, how consent was collected, how long you keep it, and how someone can see or delete it. Having this written down will help once transparency and documentation checks become more common.

Safe-by-design personalization: use cases that delight shoppers and stay low-risk

#1 Smarter discovery and search

Discovery is one of the safest places to use AI to personalize shopping experiences. Instead of a static category page, you can reorder products based on past browsing, filters, and purchases. Fans of outdoor gear see that first. Parents see kids’ items near the top.

AI-powered search can adapt in the same spirit. If a regular buyer always picks vegan or fragrance-free products, search can gently push these higher. The key is that all products stay reachable. You are guiding attention, not hiding options.
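To make "guiding attention, not hiding options" concrete, here is a minimal sketch of a re-ranking step. It assumes each product already has a base relevance `score` and a list of `tags`; the `boost` value and the data are made up for illustration. The point is that the function only reorders the list and never filters it.

```python
def rerank(products, preferred_tags, boost=0.2):
    """Reorder products so preferred ones move up; nothing is removed.

    products: list of dicts with 'id', 'score' (base relevance) and 'tags'.
    preferred_tags: set of tags inferred from past purchases (assumed input).
    """
    def personalised_score(product):
        matches = len(preferred_tags & set(product["tags"]))
        return product["score"] + boost * matches

    return sorted(products, key=personalised_score, reverse=True)


# Illustrative data only.
results = [
    {"id": "soap-a", "score": 0.95, "tags": ["scented"]},
    {"id": "soap-b", "score": 0.80, "tags": ["vegan", "fragrance-free"]},
    {"id": "soap-c", "score": 0.70, "tags": ["vegan"]},
]
ranked = rerank(results, {"vegan", "fragrance-free"})
```

Here `soap-b` moves to the top, but all three products stay in the results.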

You can also tweak onsite content. First-time visitors might see clear fit guides, while returning buyers see more styling tips or bundles.

#2 AI-powered recommendations that don’t creep people out

Recommendations are the classic playground for AI to personalize shopping experiences. Models can use viewed items, similar shoppers, frequently bought-together products, or recent trends.

From a shopper’s perspective, the difference between helpful and creepy is context. A small hint like “Based on what you viewed this week” makes the carousel feel honest. Ultra-personal suggestions with no explanation can feel unsettling.

Avoid sensitive inferences. Do not let a model guess health, religion, or political views from behaviour. This is ethically risky, and if such inferences ever shape access to financial or essential services, they can push you towards the high-risk area of the AI Act.

Keep your focus on clear commercial signals: clicks, carts, ratings, returns. Use them to improve upsells on product pages, cross-sells in cart, and re-engagement emails that feel like real help, not surveillance.
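The ideas in this section (explain the carousel, ignore sensitive categories, rely on commercial signals) can be sketched in a few lines. This is a toy example, not a real recommender: the category labels in `SENSITIVE_CATEGORIES`, the event format, and the "most-viewed category" logic are all assumptions chosen to keep the example short.

```python
SENSITIVE_CATEGORIES = {"health", "religion", "politics"}  # assumed taxonomy labels


def usable_signals(events):
    """Drop events in categories that could reveal sensitive traits."""
    return [e for e in events if e["category"] not in SENSITIVE_CATEGORIES]


def carousel(events, catalog, limit=3):
    """Suggest unseen items from the most-viewed category, with a reason label."""
    signals = usable_signals(events)
    if not signals:
        # No usable history: fall back to a non-personalised list.
        return {"label": "Popular right now", "items": catalog[:limit]}
    counts = {}
    for e in signals:
        counts[e["category"]] = counts.get(e["category"], 0) + 1
    top = max(counts, key=counts.get)
    seen = {e["product_id"] for e in signals}
    items = [p for p in catalog
             if p["category"] == top and p["id"] not in seen][:limit]
    return {"label": f"Based on {top} items you viewed recently", "items": items}


# Illustrative data only.
events = [
    {"product_id": "p1", "category": "outdoor"},
    {"product_id": "p2", "category": "outdoor"},
    {"product_id": "p9", "category": "health"},
    {"product_id": "p9", "category": "health"},
    {"product_id": "p9", "category": "health"},
]
catalog = [
    {"id": "p1", "category": "outdoor"},
    {"id": "p3", "category": "outdoor"},
    {"id": "p9", "category": "health"},
]
suggestion = carousel(events, catalog)
```

Even though the health item was viewed most often, it is ignored, and the returned label tells the shopper why the carousel looks the way it does.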

#3 Content, offers, and merchandising

AI can also help decide which banners, headlines, and offers each person sees. New visitors might get a nudge to sign up. Loyal customers might see points, perks, or early access.

The same logic works for merchandising. Models highlight items with a higher match probability for each segment while still respecting stock and margin rules. The goal is to make it easier for people to spot what fits them, not to trick them into something else.

Why does transparency matter?

Transparency is one of the easiest wins for both compliance and trust. The AI Act expects people to know when they interact with AI systems or consume certain types of AI-generated content.

Start with small changes. Label your support chatbot as an AI assistant. Go beyond chatbots, too: add a one-line explanation under recommendation carousels. Mark AI-generated descriptions or visuals when they matter for a purchase decision.

For more complex setups, create a simple “How we personalise your experience” page. Explain what AI does on your site, which data types you use, and how someone can change preferences or switch parts of it off.

Build lightweight oversight that fits retail

You don’t need a huge compliance department to use AI to personalize shopping experiences safely. What you need is clarity. Set one place where all AI use cases are listed, along with their purpose, data inputs, and level of human oversight. This simple inventory aligns with the coming expectations around transparency and internal documentation. It also helps teams avoid accidental overlap or risky experiments.
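An inventory like this does not need special tooling. As a rough sketch, each use case could be a small record with its purpose, data inputs, and oversight level, plus a check that flags incomplete entries. The `AIUseCase` type, the `owner` field, and the sample entries are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AIUseCase:
    name: str
    purpose: str
    data_inputs: list = field(default_factory=list)
    human_oversight: str = ""  # e.g. "monthly review", "approval required"
    owner: str = ""            # assumed extra field: who answers for the feature


def incomplete_entries(inventory):
    """Return names of use cases missing purpose, inputs, oversight or owner."""
    return [
        uc.name for uc in inventory
        if not (uc.purpose and uc.data_inputs and uc.human_oversight and uc.owner)
    ]


# Illustrative entries only.
INVENTORY = [
    AIUseCase("homepage_recommendations",
              "Show relevant products to returning visitors",
              ["viewed_products", "purchases"],
              "monthly review", "ecommerce product lead"),
    AIUseCase("support_chatbot",
              "Answer order and delivery questions",
              ["chat_messages"]),
]
```

Running `incomplete_entries(INVENTORY)` on this sample flags the chatbot, because nobody has written down its oversight level or owner yet.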

Create a small review group (product, legal, data, and marketing) that meets regularly. Their job is to check new ideas, flag risks early, and keep everything consistent with GDPR and the AI Act. A few written rules go a long way: keep humans in the loop for anything that touches sensitive outcomes, record how your models learn, and update risk notes as features change.

Moreover, clear ownership helps teams move fast without creating blind spots. Assign a product owner for each AI-driven feature. They’re responsible for understanding how that feature works, what data it uses, and how decisions are made. Add basic logging for actions that influence customers, like why a model recommended one product over another.

These logs support internal troubleshooting and help meet the Act’s expectations for traceability, and they don’t need to be burdensome.

The goal is simple: if someone asks “why did the system show this?”, you can answer.
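Basic logging of this kind can be one structured line per recommendation. The sketch below uses Python's standard `logging` and `json` modules; the logger name, the record fields, and the example values are assumptions, and the record deliberately carries a session ID rather than a name or email.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("personalisation.audit")  # hypothetical logger name


def log_recommendation(session_id, product_id, reason, model_version):
    """Write one JSON line explaining why a product was shown, and return it."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "session_id": session_id,
        "product_id": product_id,
        "reason": reason,
        "model_version": model_version,
    }
    line = json.dumps(record)
    logger.info(line)
    return line


# Illustrative call only.
entry = log_recommendation(
    "sess-123", "sku-42", "viewed similar hiking boots", "recs-2026-01"
)
```

With lines like this in place, answering "why did the system show this?" becomes a search over the audit log.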

How to do risk checks for new AI features?

When a new use case appears, scan for the basics:

- Could this affect access to a benefit or major decision? If yes, stop.

- Is the data needed clearly tied to improving the shopping experience?

- Does the feature tell people when they’re interacting with AI?

- Is the output fair, explainable, and reversible?

These checks take minutes once the process is set. They keep your personalization features in the safe “limited risk” or “minimal risk” territory.
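The four questions above translate naturally into a tiny checklist function. This is a sketch of the process, not a legal test: the parameter names and the "stop / revise / proceed" statuses are made up for illustration, and the answers still have to come from people who understand the feature.

```python
def risk_check(affects_major_decision, data_tied_to_experience,
               discloses_ai, fair_explainable_reversible):
    """Return (status, issues) for a proposed AI feature."""
    if affects_major_decision:
        # First question on the list: a "yes" ends the review immediately.
        return "stop", ["could affect access to a benefit or major decision"]
    issues = []
    if not data_tied_to_experience:
        issues.append("data is not clearly tied to the shopping experience")
    if not discloses_ai:
        issues.append("feature does not tell people they are interacting with AI")
    if not fair_explainable_reversible:
        issues.append("output is not fair, explainable, and reversible")
    return ("proceed" if not issues else "revise"), issues


# Example: a chatbot idea that forgot the AI disclosure.
status, issues = risk_check(
    affects_major_decision=False,
    data_tied_to_experience=True,
    discloses_ai=False,
    fair_explainable_reversible=True,
)
```

In this example the status is "revise", with one issue to fix before launch.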

In-store and omnichannel worlds

Physical stores across Europe are getting smarter, and shoppers expect consistency across all touchpoints. AI helps connect the dots. When done well, customers get a smoother journey without feeling tracked.

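Use in-store AI for practical improvements, like digital shelves that highlight relevant items or kiosks that recall someone’s size history when they log in. Keep it opt-in and transparent. Avoid identifying shoppers through facial or other biometric recognition: the AI Act prohibits several biometric practices and treats remote biometric identification as high-risk, and GDPR classes biometric data used for identification as a special category. The safest path is using anonymized patterns instead of individual identity.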

Bring your online personalization logic into stores only when people actively participate—scanning app QR codes, logging into their profile, or using a loyalty card. This gives you the context you need to make the experience helpful without crossing any regulatory lines.

Personal shopping assistants and kiosks

Smart kiosks can support shoppers by showing personalised product suggestions, size guidance, or outfit builders. These tools work best when the shopper chooses to engage, because this creates a clean consent trail.

Linking online wishlists to in-store touchpoints can improve the experience without additional data collection. Everything feels connected, but the system stays compliant.

Inventory and fulfilment optimisation

You can use AI to personalize shopping experiences at the logistics level too. Smart forecasting makes sure stores stock sizes and colours relevant to their neighbourhood. Models adjust local assortments based on previous buying patterns, using aggregated data that stays anonymous. Because no individual shopper is profiled, this keeps compliance risk low, and it improves sustainability by avoiding unnecessary overstock.
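As a simplified illustration of anonymous, store-level patterns, the sketch below turns past sales into a size mix per store. It assumes the input contains only a store ID and a size, with no customer identifiers; the store names and numbers are invented, and a real forecast would also consider seasonality and stock.

```python
from collections import Counter, defaultdict


def size_mix_by_store(sales):
    """Return each store's share of sales per size.

    sales: iterable of (store_id, size) tuples with no customer identifiers.
    """
    counts = defaultdict(Counter)
    for store_id, size in sales:
        counts[store_id][size] += 1

    mix = {}
    for store_id, size_counts in counts.items():
        total = sum(size_counts.values())
        mix[store_id] = {size: n / total for size, n in size_counts.items()}
    return mix


# Illustrative data only.
sales = [("berlin", "M"), ("berlin", "M"), ("berlin", "L"), ("lyon", "S")]
mix = size_mix_by_store(sales)
```

The output describes neighbourhoods, not people, which is what keeps this kind of optimisation low-risk.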

Examples you can use even today

Start with your homepage: adapt banners, recommendations, and categories to returning visitors. Then expand to search results, email timing, and product detail pages. Each small upgrade compounds into a meaningful experience, without any jump into high-risk AI territory.

Smarter product feeds

Reorder category pages based on past browsing and purchase behaviour. Keep everything visible, but let the top results feel more aligned. This makes navigation quicker and reduces friction for shoppers who want to find “their style” faster.

AI-guided search refinement

If someone keeps clicking sustainable materials, cruelty-free beauty, or minimalist furniture, search can reflect those preferences. The key is subtlety: don’t lock people into a narrow bubble. Let them override or reset filters anytime.

Personalised bundles

Bundles can adapt to someone’s pattern—frequent movers might see home-organising sets, while fashion buyers see curated outfits. Dynamic bundling increases value without needing sensitive data.

Ethical personalization

You want customers to feel respected, not analysed.

You want recommendations to help, not manipulate.

And you want your personalization to stay far from anything that touches protected characteristics.

Check for bias by testing how recommendations behave across age groups, regions, and new customers with minimal data. Use model explanations to confirm that suggestions come from behaviour, not accidental proxies. Keep all outputs reversible and inspectable.

Bias checks for retail teams

Retail teams can run simple tests: create sample profiles, simulate journeys, and compare results. If the system steers different groups in overly narrow directions, adjust the model or its training data.
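A simple version of this test can be scripted. The sketch below feeds sample profiles to a recommender and counts how many distinct categories each one sees. Everything here is hypothetical: `toy_recommend` is a stand-in for your real model, and "fewer than two categories" is an arbitrary threshold chosen for the example.

```python
def category_spread(recommend, profiles, top_n=5):
    """Count distinct categories in the top-N recommendations per profile."""
    report = {}
    for name, profile in profiles.items():
        items = recommend(profile)[:top_n]
        report[name] = len({item["category"] for item in items})
    return report


def narrow_profiles(report, min_categories=2):
    """Return profiles that see fewer categories than the threshold."""
    return sorted(name for name, n in report.items() if n < min_categories)


def toy_recommend(profile):
    # Stand-in for a real model: deliberately narrow for one group.
    if profile["age_group"] == "65+":
        return [{"id": f"g{i}", "category": "garden"} for i in range(5)]
    return [
        {"id": "a1", "category": "fashion"},
        {"id": "a2", "category": "home"},
        {"id": "a3", "category": "outdoor"},
    ]


# Illustrative sample profiles.
PROFILES = {
    "new_visitor": {"age_group": "unknown"},
    "young_urban": {"age_group": "18-24"},
    "senior": {"age_group": "65+"},
}
report = category_spread(toy_recommend, PROFILES)
flagged = narrow_profiles(report)
```

In this toy run the "senior" profile is flagged because it only ever sees garden products, which is the kind of narrow steering worth investigating.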

“Fairness” means a lot in commerce

Fairness in retail AI is about choice. Everyone should see the full assortment. Personalization narrows focus, but never access. Always give people a reset option, and avoid any segmentation linked to sensitive traits.

Last words

2026 is when transparency rules and many high-risk controls are due to apply. Retailers using AI to personalize shopping experiences should treat the next months as a setup phase. A bit of structure now saves a lot of work later.

Start by listing where AI appears today. Add a one-page risk note for each feature. Create or update your transparency statements. Align your consent model with GDPR and the Act’s expectations. Then, decide how you want to grow—more adaptive search, more dynamic content, better recommendations, or in-store personalization?

Set rules for how models learn and how often they update. Keep a human fallback for high-impact interactions, like service decisions or refunds. Write a short policy on AI-generated content and how you label it.

These safeguards show regulators that your approach is controlled, intentional, and safe—even as your personalization becomes more intelligent.